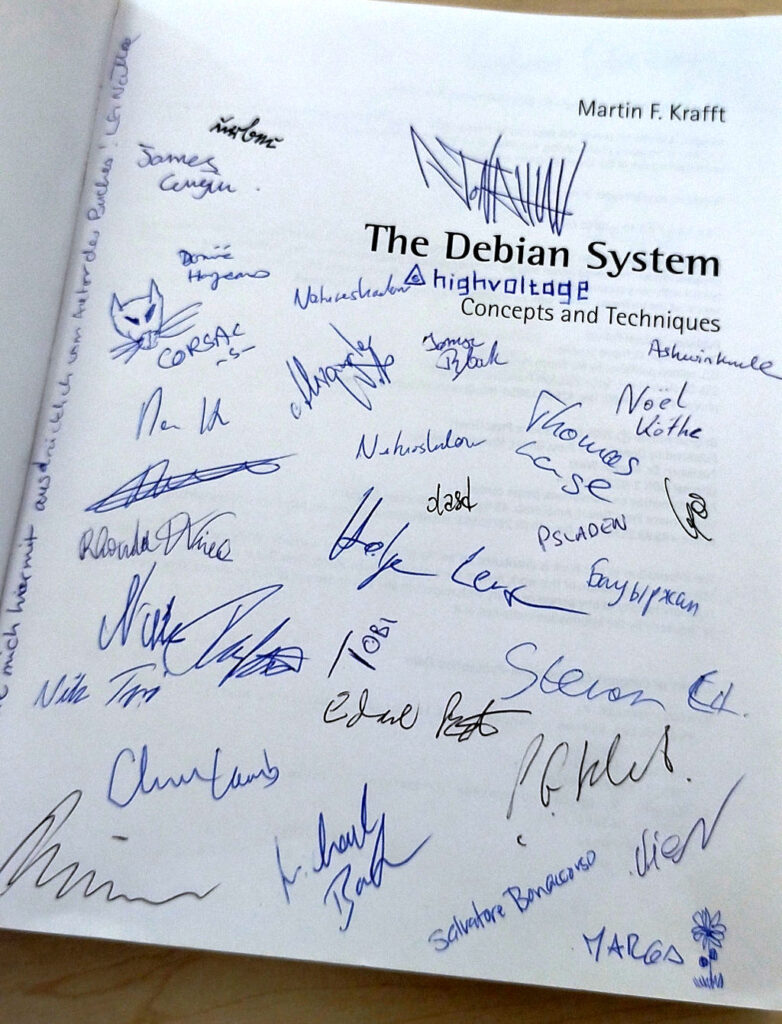

Andrew Cater: 202306101010 - Debian release preparations and boot media testing in Cambridge

Coffee and tea at the ready - bacon sandwiches are on the way

[And the build process is under way - and smcv has joined us]

Contributing to Debian is part of Freexian's mission. This article covers the latest achievements of Freexian and their collaborators. All of this is made possible by organizations subscribing to our Long Term Support contracts and consulting services.

Thank you to Holger for organising this event yet again!

Büchel Tivoli light that didn't work

Cable for the throttle port

Axa 606 E6-48 light

Axa Spark light

Front light

Rear light

Welcome to the March 2023 report from the Reproducible Builds project.

In these reports we outline the most important things that we have been up to over the past month. As a quick recap, the motivation behind the reproducible builds effort is to ensure no malicious flaws have been introduced during the compilation and distribution processes. It does this by ensuring identical results are always generated from a given source, thus allowing multiple third parties to come to a consensus on whether a build was compromised.

If you are interested in contributing to the project, please do visit our Contribute page on our website.
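The consensus idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the builder names and artifact bytes are hypothetical, and a simple majority vote is a simplification (a mismatch tells you that something differs, not which party is at fault).

```python
import hashlib
from collections import Counter


def artifact_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a build artifact."""
    return hashlib.sha256(data).hexdigest()


def consensus(digests: dict[str, str]) -> tuple[str, list[str]]:
    """Return the most common digest and the builders that disagree with it."""
    counts = Counter(digests.values())
    majority, _ = counts.most_common(1)[0]
    dissenters = sorted(name for name, d in digests.items() if d != majority)
    return majority, dissenters


# Hypothetical independent rebuilders and the bytes each one produced.
builds = {
    "builder-a": b"\x7fELF identical bytes",
    "builder-b": b"\x7fELF identical bytes",
    "builder-c": b"\x7fELF tampered bytes",
}
digests = {name: artifact_digest(blob) for name, blob in builds.items()}
majority, dissenters = consensus(digests)
print("builders that disagree with the majority:", dissenters)
```

Comparing digests only works because a reproducible build yields bit-for-bit identical output; without that property every builder would report a different hash and no consensus would be possible.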

There was progress towards making the Go programming language reproducible this month. The overall goal remains for the Go binaries distributed by Google and by Arch Linux (and others) to be bit-for-bit identical. These changes could become part of the upcoming version 1.21 release of Go. An issue in the Go issue tracker (#57120) is being used to follow and record progress on this.

Intel published a guide on how to reproducibly build their Trust Domain Extensions (TDX) firmware. TDX here refers to an Intel technology that combines their existing virtual machine and memory encryption technology with a new kind of virtual machine guest called a Trust Domain. This runs the CPU in a mode that protects the confidentiality of its memory contents and its state from any other software.

When GCC was invoked via the as frontend, the -ffile-prefix-map option was being ignored. We were tracking this in Debian via the build_path_captured_in_assembly_objects issue. It has now been fixed and will be reflected in GCC version 13.
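To see why an ignored -ffile-prefix-map matters, here is a minimal Python sketch of the effect the option is meant to have on recorded file names. It is not GCC's implementation: the `remap` helper and the two build directories are hypothetical, and the sketch only models a plain prefix substitution.

```python
def remap(path: str, old: str, new: str) -> str:
    """Rewrite `path` as if files under `old` resided under `new`."""
    if path.startswith(old):
        return new + path[len(old):]
    return path


# Two hypothetical build directories for the same source tree.
build_roots = ["/home/alice/src/hello", "/tmp/build-1234/hello"]

# Without remapping, the recorded source paths differ per build directory.
unmapped = {f"{root}/main.c" for root in build_roots}

# With remapping, both builds record the same path.
mapped = {remap(f"{root}/main.c", root, ".") for root in build_roots}

print(sorted(unmapped))
print(sorted(mapped))
```

When the mapping is skipped, as happened for assembly objects, the absolute build path leaks into the object file and two otherwise identical builds differ.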

Holger Levsen will present at foss-north 2023 in April of this year in Gothenburg, Sweden on the topic of Reproducible Builds, the first ten years.

Software Supply Chain Attacks (SSCAs) typically compromise hosts through trusted but infected software. The intent of this paper is twofold: First, we present an empirical study of the most prominent software supply chain attacks and their characteristics. Second, we propose an investigative framework for identifying, expressing, and evaluating characteristic behaviours of newfound attacks for mitigation and future defense purposes. We hypothesize that these behaviours are statistically malicious, existed in the past, and thus could have been thwarted in modernity through their cementation x-years ago.

#reproducible-builds on the OFTC network.

And as a Valentine's Day present, Holger Levsen wrote on his blog on 14th February to express his thanks to OSUOSL for their continuous support of reproducible-builds.org.

Vagrant Cascadian developed an easier setup for testing Debian packages which uses sbuild's unshare mode along with reprotest, our tool for building the same source code twice in different environments and then checking the binaries produced by each build for any differences.

build_path_captured_in_assembly_objects to note that it has been fixed for GCC 13, and Vagrant Cascadian added new issues to mark packages where the build path is being captured via the Rust toolchain as well as new categorisation for where virtual packages have nondeterministic versioned dependencies.

- cockpit (gzip mtime)
- crmsh (by mcepl: rewrite to avoid python toolchain issue)
- cx_Freeze (merged, FTBFS-2038)
- golangci-lint (date)
- guestfs-tools (gzip mtime)
- perf (merged, sort python scandir)
- perl-Date-Calc-XS (FTBFS-2038)
- perl-Date-Calc (FTBFS-2038)
- pw3270 (merged, date)
- python-dtaidistance (drop unreproducible unnecessary file)
- sonic-pi (FTBFS-2038)
- spack (parallelism)
- tesseract (fixed, CPU, -march=native)
- esda
- gle-graphics-manual
- transfig/fig2dev (also in openSUSE; date in PDF)

SOURCE_DATE_EPOCH environment variable.
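Several of the fixes above concern timestamps embedded in gzip headers, and SOURCE_DATE_EPOCH is the usual remedy. The following Python sketch shows the general technique, not any of the actual patches: the `build_timestamp` and `compress` helpers and the epoch value are assumptions made up for illustration.

```python
import gzip
import os
import time


def build_timestamp() -> int:
    """Use SOURCE_DATE_EPOCH when set, else fall back to the current time."""
    value = os.environ.get("SOURCE_DATE_EPOCH")
    return int(value) if value is not None else int(time.time())


def compress(data: bytes) -> bytes:
    """Compress `data`, pinning the gzip header mtime to the build timestamp."""
    return gzip.compress(data, mtime=build_timestamp())


# Hypothetical value; a real build would derive it from the source,
# for example from the date of the latest changelog entry.
os.environ["SOURCE_DATE_EPOCH"] = "1679000000"

first = compress(b"hello\n")
second = compress(b"hello\n")
print("identical archives:", first == second)
```

Without the pinned mtime, the gzip header records the time of compression, so two builds run a second apart would already produce different bytes.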

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In March, the following changes were made by Holger Levsen:

- ... megacli packages that are needed for hardware RAID.
- ... /srv/workspace directory is owned by the jenkins user.
- ... .debian.net names everywhere, except when communicating with the outside world.
- ... docker group from the janitor_setup_worker script to the (more general) update_jdn.sh script.
- ... live-build images.

diffoscope is our in-depth and content-aware diff utility. Not only can it locate and diagnose reproducibility issues, it can provide human-readable diffs from many kinds of binary formats as well. This month, Mattia Rizzolo released version 238, and Chris Lamb released versions 239 and 240. Chris Lamb also made the following changes:

include_package_data=True, fixed the build under Debian bullseye, fixed tool name in a list of tools permitted to be absent during package build tests, as well as documented sending out an email upon [...].

In addition, Vagrant Cascadian updated the version of diffoscope in GNU Guix to 238 and 239. Vagrant also updated reprotest to version 0.7.23.
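To give a feel for what a human-readable diff of binary data looks like, here is a small Python sketch that diffs two hexdumps. It is far simpler than diffoscope, which understands and unpacks many file formats; the `hexdump` and `binary_diff` helpers and the sample bytes are hypothetical.

```python
import difflib


def hexdump(data: bytes, width: int = 8) -> list[str]:
    """Render bytes as lines of 'offset  hex bytes'."""
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        lines.append(f"{offset:08x}  {chunk.hex(' ')}")
    return lines


def binary_diff(a: bytes, b: bytes) -> list[str]:
    """Return a unified diff of the hexdumps of two byte strings."""
    return list(
        difflib.unified_diff(hexdump(a), hexdump(b), "first", "second", lineterm="")
    )


# Two hypothetical build outputs that differ only in an embedded date.
first_build = b"build-2023-03-01"
second_build = b"build-2023-03-02"

for line in binary_diff(first_build, second_build):
    print(line)
```

Only the second hexdump row differs here, which points straight at the embedded date as the source of the variation.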

Bernhard M. Wiedemann published another monthly report about reproducibility within openSUSE

#reproducible-builds on irc.oftc.net.

rb-general@lists.reproducible-builds.org

Nitrokey Start

$ sha256sum python3-pip*

ded6b3867a4a4cbaff0940cab366975d6aeecc76b9f2d2efa3deceb062668b1c python3-pip_22.0.2+dfsg-1ubuntu0.2_all.deb

e1561575130c41dc3309023a345de337e84b4b04c21c74db57f599e267114325 python3-pip-whl_22.0.2+dfsg-1ubuntu0.2_all.deb

$ doas dpkg -i python3-pip*

...

$ doas apt install -f

...

jas@kaka:~$ pip3 install --user pynitrokey
Collecting pynitrokey
  Downloading pynitrokey-0.4.34-py3-none-any.whl (572 kB)
Collecting frozendict~=2.3.4
  Downloading frozendict-2.3.5-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (113 kB)
Requirement already satisfied: click<9,>=8.0.0 in /usr/lib/python3/dist-packages (from pynitrokey) (8.0.3)
Collecting ecdsa
  Downloading ecdsa-0.18.0-py2.py3-none-any.whl (142 kB)
Collecting python-dateutil~=2.7.0
  Downloading python_dateutil-2.7.5-py2.py3-none-any.whl (225 kB)
Collecting fido2<2,>=1.1.0
  Downloading fido2-1.1.0-py3-none-any.whl (201 kB)
Collecting tlv8
  Downloading tlv8-0.10.0.tar.gz (16 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: certifi>=14.5.14 in /usr/lib/python3/dist-packages (from pynitrokey) (2020.6.20)
Requirement already satisfied: pyusb in /usr/lib/python3/dist-packages (from pynitrokey) (1.2.1.post1)
Collecting urllib3~=1.26.7
  Downloading urllib3-1.26.15-py2.py3-none-any.whl (140 kB)
Collecting spsdk<1.8.0,>=1.7.0
  Downloading spsdk-1.7.1-py3-none-any.whl (684 kB)
Collecting typing_extensions~=4.3.0
  Downloading typing_extensions-4.3.0-py3-none-any.whl (25 kB)
Requirement already satisfied: cryptography<37,>=3.4.4 in /usr/lib/python3/dist-packages (from pynitrokey) (3.4.8)
Collecting intelhex
  Downloading intelhex-2.3.0-py2.py3-none-any.whl (50 kB)
Collecting nkdfu
  Downloading nkdfu-0.2-py3-none-any.whl (16 kB)
Requirement already satisfied: requests in /usr/lib/python3/dist-packages (from pynitrokey) (2.25.1)
Collecting tqdm
  Downloading tqdm-4.65.0-py3-none-any.whl (77 kB)
Collecting nrfutil<7,>=6.1.4
  Downloading nrfutil-6.1.7.tar.gz (845 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: cffi in /usr/lib/python3/dist-packages (from pynitrokey) (1.15.0)
Collecting crcmod
  Downloading crcmod-1.7.tar.gz (89 kB)
  Preparing metadata (setup.py) ... done
Collecting libusb1==1.9.3
  Downloading libusb1-1.9.3-py3-none-any.whl (60 kB)
Collecting pc_ble_driver_py>=0.16.4
  Downloading pc_ble_driver_py-0.17.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.9 MB)
Collecting piccata
  Downloading piccata-2.0.3-py3-none-any.whl (21 kB)
Collecting protobuf<4.0.0,>=3.17.3
  Downloading protobuf-3.20.3-cp310-cp310-manylinux_2_12_x86_64.manylinux2010_x86_64.whl (1.1 MB)
Collecting pyserial
  Downloading pyserial-3.5-py2.py3-none-any.whl (90 kB)
Collecting pyspinel>=1.0.0a3
  Downloading pyspinel-1.0.3.tar.gz (58 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: pyyaml in /usr/lib/python3/dist-packages (from nrfutil<7,>=6.1.4->pynitrokey) (5.4.1)
Requirement already satisfied: six>=1.5 in /usr/lib/python3/dist-packages (from python-dateutil~=2.7.0->pynitrokey) (1.16.0)
Collecting pylink-square<0.11.9,>=0.8.2
  Downloading pylink_square-0.11.1-py2.py3-none-any.whl (78 kB)
Collecting jinja2<3.1,>=2.11
  Downloading Jinja2-3.0.3-py3-none-any.whl (133 kB)
Collecting bincopy<17.11,>=17.10.2
  Downloading bincopy-17.10.3-py3-none-any.whl (17 kB)
Collecting fastjsonschema>=2.15.1
  Downloading fastjsonschema-2.16.3-py3-none-any.whl (23 kB)
Collecting astunparse<2,>=1.6
  Downloading astunparse-1.6.3-py2.py3-none-any.whl (12 kB)
Collecting oscrypto~=1.2
  Downloading oscrypto-1.3.0-py2.py3-none-any.whl (194 kB)
Collecting deepmerge==0.3.0
  Downloading deepmerge-0.3.0-py2.py3-none-any.whl (7.6 kB)
Collecting pyocd<=0.31.0,>=0.28.3
  Downloading pyocd-0.31.0-py3-none-any.whl (12.5 MB)
Collecting click-option-group<0.6,>=0.3.0
  Downloading click_option_group-0.5.5-py3-none-any.whl (12 kB)
Collecting pycryptodome<4,>=3.9.3
  Downloading pycryptodome-3.17-cp35-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.1 MB)
Collecting pyocd-pemicro<1.2.0,>=1.1.1
  Downloading pyocd_pemicro-1.1.5-py3-none-any.whl (9.0 kB)
Requirement already satisfied: colorama<1,>=0.4.4 in /usr/lib/python3/dist-packages (from spsdk<1.8.0,>=1.7.0->pynitrokey) (0.4.4)
Collecting commentjson<1,>=0.9
  Downloading commentjson-0.9.0.tar.gz (8.7 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: asn1crypto<2,>=1.2 in /usr/lib/python3/dist-packages (from spsdk<1.8.0,>=1.7.0->pynitrokey) (1.4.0)
Collecting pypemicro<0.2.0,>=0.1.9
  Downloading pypemicro-0.1.11-py3-none-any.whl (5.7 MB)
Collecting libusbsio>=2.1.11
  Downloading libusbsio-2.1.11-py3-none-any.whl (247 kB)
Collecting sly==0.4
  Downloading sly-0.4.tar.gz (60 kB)
  Preparing metadata (setup.py) ... done
Collecting ruamel.yaml<0.18.0,>=0.17
  Downloading ruamel.yaml-0.17.21-py3-none-any.whl (109 kB)
Collecting cmsis-pack-manager<0.3.0
  Downloading cmsis_pack_manager-0.2.10-py2.py3-none-manylinux1_x86_64.whl (25.1 MB)
Collecting click-command-tree==1.1.0
  Downloading click_command_tree-1.1.0-py3-none-any.whl (3.6 kB)
Requirement already satisfied: bitstring<3.2,>=3.1 in /usr/lib/python3/dist-packages (from spsdk<1.8.0,>=1.7.0->pynitrokey) (3.1.7)
Collecting hexdump~=3.3
  Downloading hexdump-3.3.zip (12 kB)
  Preparing metadata (setup.py) ... done
Collecting fire
  Downloading fire-0.5.0.tar.gz (88 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: wheel<1.0,>=0.23.0 in /usr/lib/python3/dist-packages (from astunparse<2,>=1.6->spsdk<1.8.0,>=1.7.0->pynitrokey) (0.37.1)
Collecting humanfriendly
  Downloading humanfriendly-10.0-py2.py3-none-any.whl (86 kB)
Collecting argparse-addons>=0.4.0
  Downloading argparse_addons-0.12.0-py3-none-any.whl (3.3 kB)
Collecting pyelftools
  Downloading pyelftools-0.29-py2.py3-none-any.whl (174 kB)
Collecting milksnake>=0.1.2
  Downloading milksnake-0.1.5-py2.py3-none-any.whl (9.6 kB)
Requirement already satisfied: appdirs>=1.4 in /usr/lib/python3/dist-packages (from cmsis-pack-manager<0.3.0->spsdk<1.8.0,>=1.7.0->pynitrokey) (1.4.4)
Collecting lark-parser<0.8.0,>=0.7.1
  Downloading lark-parser-0.7.8.tar.gz (276 kB)
  Preparing metadata (setup.py) ... done
Requirement already satisfied: MarkupSafe>=2.0 in /usr/lib/python3/dist-packages (from jinja2<3.1,>=2.11->spsdk<1.8.0,>=1.7.0->pynitrokey) (2.0.1)
Collecting asn1crypto<2,>=1.2
  Downloading asn1crypto-1.5.1-py2.py3-none-any.whl (105 kB)
Collecting wrapt
  Downloading wrapt-1.15.0-cp310-cp310-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (78 kB)
Collecting future
  Downloading future-0.18.3.tar.gz (840 kB)
  Preparing metadata (setup.py) ... done
Collecting psutil>=5.2.2
  Downloading psutil-5.9.4-cp36-abi3-manylinux_2_12_x86_64.manylinux2010_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (280 kB)
Collecting capstone<5.0,>=4.0
  Downloading capstone-4.0.2-py2.py3-none-manylinux1_x86_64.whl (2.1 MB)
Collecting naturalsort<2.0,>=1.5
  Downloading naturalsort-1.5.1.tar.gz (7.4 kB)
  Preparing metadata (setup.py) ... done
Collecting prettytable<3.0,>=2.0
  Downloading prettytable-2.5.0-py3-none-any.whl (24 kB)
Collecting intervaltree<4.0,>=3.0.2
  Downloading intervaltree-3.1.0.tar.gz (32 kB)
  Preparing metadata (setup.py) ... done
Collecting ruamel.yaml.clib>=0.2.6
  Downloading ruamel.yaml.clib-0.2.7-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_24_x86_64.whl (485 kB)
Collecting termcolor
  Downloading termcolor-2.2.0-py3-none-any.whl (6.6 kB)
Collecting sortedcontainers<3.0,>=2.0
  Downloading sortedcontainers-2.4.0-py2.py3-none-any.whl (29 kB)
Requirement already satisfied: wcwidth in /usr/lib/python3/dist-packages (from prettytable<3.0,>=2.0->pyocd<=0.31.0,>=0.28.3->spsdk<1.8.0,>=1.7.0->pynitrokey) (0.2.5)
Building wheels for collected packages: nrfutil, crcmod, sly, tlv8, commentjson, hexdump, pyspinel, fire, intervaltree, lark-parser, naturalsort, future
  Building wheel for nrfutil (setup.py) ... done
  Created wheel for nrfutil: filename=nrfutil-6.1.7-py3-none-any.whl size=898520 sha256=de6f8803f51d6c26d24dc7df6292064a468ff3f389d73370433fde5582b84a10
  Stored in directory: /home/jas/.cache/pip/wheels/39/2b/9b/98ab2dd716da746290e6728bdb557b14c1c9a54cb9ed86e13b
  Building wheel for crcmod (setup.py) ... done
  Created wheel for crcmod: filename=crcmod-1.7-cp310-cp310-linux_x86_64.whl size=31422 sha256=5149ac56fcbfa0606760eef5220fcedc66be560adf68cf38c604af3ad0e4a8b0
  Stored in directory: /home/jas/.cache/pip/wheels/85/4c/07/72215c529bd59d67e3dac29711d7aba1b692f543c808ba9e86
  Building wheel for sly (setup.py) ... done
  Created wheel for sly: filename=sly-0.4-py3-none-any.whl size=27352 sha256=f614e413918de45c73d1e9a8dca61ca07dc760d9740553400efc234c891f7fde
  Stored in directory: /home/jas/.cache/pip/wheels/a2/23/4a/6a84282a0d2c29f003012dc565b3126e427972e8b8157ea51f
  Building wheel for tlv8 (setup.py) ... done
  Created wheel for tlv8: filename=tlv8-0.10.0-py3-none-any.whl size=11266 sha256=3ec8b3c45977a3addbc66b7b99e1d81b146607c3a269502b9b5651900a0e2d08
  Stored in directory: /home/jas/.cache/pip/wheels/e9/35/86/66a473cc2abb0c7f21ed39c30a3b2219b16bd2cdb4b33cfc2c
  Building wheel for commentjson (setup.py) ... done
  Created wheel for commentjson: filename=commentjson-0.9.0-py3-none-any.whl size=12092 sha256=28b6413132d6d7798a18cf8c76885dc69f676ea763ffcb08775a3c2c43444f4a
  Stored in directory: /home/jas/.cache/pip/wheels/7d/90/23/6358a234ca5b4ec0866d447079b97fedf9883387d1d7d074e5
  Building wheel for hexdump (setup.py) ... done
  Created wheel for hexdump: filename=hexdump-3.3-py3-none-any.whl size=8913 sha256=79dfadd42edbc9acaeac1987464f2df4053784fff18b96408c1309b74fd09f50
  Stored in directory: /home/jas/.cache/pip/wheels/26/28/f7/f47d7ecd9ae44c4457e72c8bb617ef18ab332ee2b2a1047e87
  Building wheel for pyspinel (setup.py) ... done
  Created wheel for pyspinel: filename=pyspinel-1.0.3-py3-none-any.whl size=65033 sha256=01dc27f81f28b4830a0cf2336dc737ef309a1287fcf33f57a8a4c5bed3b5f0a6
  Stored in directory: /home/jas/.cache/pip/wheels/95/ec/4b/6e3e2ee18e7292d26a65659f75d07411a6e69158bb05507590
  Building wheel for fire (setup.py) ... done
  Created wheel for fire: filename=fire-0.5.0-py2.py3-none-any.whl size=116951 sha256=3d288585478c91a6914629eb739ea789828eb2d0267febc7c5390cb24ba153e8
  Stored in directory: /home/jas/.cache/pip/wheels/90/d4/f7/9404e5db0116bd4d43e5666eaa3e70ab53723e1e3ea40c9a95
  Building wheel for intervaltree (setup.py) ... done
  Created wheel for intervaltree: filename=intervaltree-3.1.0-py2.py3-none-any.whl size=26119 sha256=5ff1def22ba883af25c90d90ef7c6518496fcd47dd2cbc53a57ec04cd60dc21d
  Stored in directory: /home/jas/.cache/pip/wheels/fa/80/8c/43488a924a046b733b64de3fac99252674c892a4c3801c0a61
  Building wheel for lark-parser (setup.py) ... done
  Created wheel for lark-parser: filename=lark_parser-0.7.8-py2.py3-none-any.whl size=62527 sha256=3d2ec1d0f926fc2688d40777f7ef93c9986f874169132b1af590b6afc038f4be
  Stored in directory: /home/jas/.cache/pip/wheels/29/30/94/33e8b58318aa05cb1842b365843036e0280af5983abb966b83
  Building wheel for naturalsort (setup.py) ... done
  Created wheel for naturalsort: filename=naturalsort-1.5.1-py3-none-any.whl size=7526 sha256=bdecac4a49f2416924548cae6c124c85d5333e9e61c563232678ed182969d453
  Stored in directory: /home/jas/.cache/pip/wheels/a6/8e/c9/98cfa614fff2979b457fa2d9ad45ec85fa417e7e3e2e43be51
  Building wheel for future (setup.py) ... done
  Created wheel for future: filename=future-0.18.3-py3-none-any.whl size=492037 sha256=57a01e68feca2b5563f5f624141267f399082d2f05f55886f71b5d6e6cf2b02c
  Stored in directory: /home/jas/.cache/pip/wheels/5e/a9/47/f118e66afd12240e4662752cc22cefae5d97275623aa8ef57d
Successfully built nrfutil crcmod sly tlv8 commentjson hexdump pyspinel fire intervaltree lark-parser naturalsort future
Installing collected packages: tlv8, sortedcontainers, sly, pyserial, pyelftools, piccata, naturalsort, libusb1, lark-parser, intelhex, hexdump, fastjsonschema, crcmod, asn1crypto, wrapt, urllib3, typing_extensions, tqdm, termcolor, ruamel.yaml.clib, python-dateutil, pyspinel, pypemicro, pycryptodome, psutil, protobuf, prettytable, oscrypto, milksnake, libusbsio, jinja2, intervaltree, humanfriendly, future, frozendict, fido2, ecdsa, deepmerge, commentjson, click-option-group, click-command-tree, capstone, astunparse, argparse-addons, ruamel.yaml, pyocd-pemicro, pylink-square, pc_ble_driver_py, fire, cmsis-pack-manager, bincopy, pyocd, nrfutil, nkdfu, spsdk, pynitrokey
  WARNING: The script nitropy is installed in '/home/jas/.local/bin' which is not on PATH.
  Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.
Successfully installed argparse-addons-0.12.0 asn1crypto-1.5.1 astunparse-1.6.3 bincopy-17.10.3 capstone-4.0.2 click-command-tree-1.1.0 click-option-group-0.5.5 cmsis-pack-manager-0.2.10 commentjson-0.9.0 crcmod-1.7 deepmerge-0.3.0 ecdsa-0.18.0 fastjsonschema-2.16.3 fido2-1.1.0 fire-0.5.0 frozendict-2.3.5 future-0.18.3 hexdump-3.3 humanfriendly-10.0 intelhex-2.3.0 intervaltree-3.1.0 jinja2-3.0.3 lark-parser-0.7.8 libusb1-1.9.3 libusbsio-2.1.11 milksnake-0.1.5 naturalsort-1.5.1 nkdfu-0.2 nrfutil-6.1.7 oscrypto-1.3.0 pc_ble_driver_py-0.17.0 piccata-2.0.3 prettytable-2.5.0 protobuf-3.20.3 psutil-5.9.4 pycryptodome-3.17 pyelftools-0.29 pylink-square-0.11.1 pynitrokey-0.4.34 pyocd-0.31.0 pyocd-pemicro-1.1.5 pypemicro-0.1.11 pyserial-3.5 pyspinel-1.0.3 python-dateutil-2.7.5 ruamel.yaml-0.17.21 ruamel.yaml.clib-0.2.7 sly-0.4 sortedcontainers-2.4.0 spsdk-1.7.1 termcolor-2.2.0 tlv8-0.10.0 tqdm-4.65.0 typing_extensions-4.3.0 urllib3-1.26.15 wrapt-1.15.0

jas@kaka:~$

Then upgrading the device worked remarkably well, although I wish that the tool had printed URLs and checksums for the firmware files to allow easy confirmation.

jas@kaka:~$ PATH=$PATH:/home/jas/.local/bin

jas@kaka:~$ nitropy start list

Command line tool to interact with Nitrokey devices 0.4.34

:: 'Nitrokey Start' keys:

FSIJ-1.2.15-5D271572: Nitrokey Nitrokey Start (RTM.12.1-RC2-modified)

jas@kaka:~$ nitropy start update

Command line tool to interact with Nitrokey devices 0.4.34

Nitrokey Start firmware update tool

Platform: Linux-5.15.0-67-generic-x86_64-with-glibc2.35

System: Linux, is_linux: True

Python: 3.10.6

Saving run log to: /tmp/nitropy.log.gc5753a8

Admin PIN:

Firmware data to be used:

- FirmwareType.REGNUAL: 4408, hash: ...b'72a30389' valid (from ...built/RTM.13/regnual.bin)

- FirmwareType.GNUK: 129024, hash: ...b'25a4289b' valid (from ...prebuilt/RTM.13/gnuk.bin)

Currently connected device strings:

Device:

Vendor: Nitrokey

Product: Nitrokey Start

Serial: FSIJ-1.2.15-5D271572

Revision: RTM.12.1-RC2-modified

Config: *:*:8e82

Sys: 3.0

Board: NITROKEY-START-G

initial device strings: [{'name': '', 'Vendor': 'Nitrokey', 'Product': 'Nitrokey Start', 'Serial': 'FSIJ-1.2.15-5D271572', 'Revision': 'RTM.12.1-RC2-modified', 'Config': '*:*:8e82', 'Sys': '3.0', 'Board': 'NITROKEY-START-G'}]

Please note:

- Latest firmware available is:

RTM.13 (published: 2022-12-08T10:59:11Z)

- provided firmware: None

- all data will be removed from the device!

- do not interrupt update process - the device may not run properly!

- the process should not take more than 1 minute

Do you want to continue? [yes/no]: yes

...

Starting bootloader upload procedure

Device: Nitrokey Start FSIJ-1.2.15-5D271572

Connected to the device

Running update!

Do NOT remove the device from the USB slot, until further notice

Downloading flash upgrade program...

Executing flash upgrade...

Waiting for device to appear:

Wait 20 seconds.....

Downloading the program

Protecting device

Finish flashing

Resetting device

Update procedure finished. Device could be removed from USB slot.

Currently connected device strings (after upgrade):

Device:

Vendor: Nitrokey

Product: Nitrokey Start

Serial: FSIJ-1.2.19-5D271572

Revision: RTM.13

Config: *:*:8e82

Sys: 3.0

Board: NITROKEY-START-G

device can now be safely removed from the USB slot

final device strings: [{'name': '', 'Vendor': 'Nitrokey', 'Product': 'Nitrokey Start', 'Serial': 'FSIJ-1.2.19-5D271572', 'Revision': 'RTM.13', 'Config': '*:*:8e82', 'Sys': '3.0', 'Board': 'NITROKEY-START-G'}]

finishing session 2023-03-16 21:49:07.371291

Log saved to: /tmp/nitropy.log.gc5753a8

jas@kaka:~$

jas@kaka:~$ nitropy start list

Command line tool to interact with Nitrokey devices 0.4.34

:: 'Nitrokey Start' keys:

FSIJ-1.2.19-5D271572: Nitrokey Nitrokey Start (RTM.13)

jas@kaka:~$
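The checksum confirmation I wished for could look roughly like the following Python sketch. It is an illustration under assumptions: the file below is a stand-in written by the script itself, not real firmware, and the expected digest would in practice have to come from a trusted, independently published source. The update log above only shows short hash fragments, so nothing here reproduces what nitropy itself computes.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, expected_hex: str) -> bool:
    """Return True when the file's digest matches the expected value."""
    return sha256_of(path) == expected_hex.lower()


with tempfile.TemporaryDirectory() as tmp:
    # Stand-in file with known content; the name is only illustrative.
    firmware = Path(tmp) / "gnuk.bin"
    firmware.write_bytes(b"abc")

    # SHA-256 of b"abc", the well-known test vector.
    expected = "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad"
    ok = verify(firmware, expected)
    bad = verify(firmware, "0" * 64)

print("matches published digest:", ok)
print("matches wrong digest:", bad)
```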

Before importing the master key to this device, it should be configured. Note the commands at the beginning, which make sure that scdaemon and pcscd are not running, because they may have cached state from earlier cards. Change the PIN codes as you like after this. My experience with Gnuk was that the Admin PIN had to be changed first, then you import the key, and then you change the PIN.

jas@kaka:~$ gpg-connect-agent "SCD KILLSCD" "SCD BYE" /bye

OK

ERR 67125247 End of file <GPG Agent>

jas@kaka:~$ ps auxww | grep -e pcsc -e scd

jas 11651 0.0 0.0 3468 1672 pts/0 R+ 21:54 0:00 grep --color=auto -e pcsc -e scd

jas@kaka:~$ gpg --card-edit

Reader ...........: 20A0:4211:FSIJ-1.2.19-5D271572:0

Application ID ...: D276000124010200FFFE5D2715720000

Application type .: OpenPGP

Version ..........: 2.0

Manufacturer .....: unmanaged S/N range

Serial number ....: 5D271572

Name of cardholder: [not set]

Language prefs ...: [not set]

Salutation .......:

URL of public key : [not set]

Login data .......: [not set]

Signature PIN ....: forced

Key attributes ...: rsa2048 rsa2048 rsa2048

Max. PIN lengths .: 127 127 127

PIN retry counter : 3 3 3

Signature counter : 0

KDF setting ......: off

Signature key ....: [none]

Encryption key....: [none]

Authentication key: [none]

General key info..: [none]

gpg/card> admin

Admin commands are allowed

gpg/card> kdf-setup

gpg/card> passwd

gpg: OpenPGP card no. D276000124010200FFFE5D2715720000 detected

1 - change PIN

2 - unblock PIN

3 - change Admin PIN

4 - set the Reset Code

Q - quit

Your selection? 3

PIN changed.

1 - change PIN

2 - unblock PIN

3 - change Admin PIN

4 - set the Reset Code

Q - quit

Your selection? q

gpg/card> name

Cardholder's surname: Josefsson

Cardholder's given name: Simon

gpg/card> lang

Language preferences: sv

gpg/card> sex

Salutation (M = Mr., F = Ms., or space): m

gpg/card> login

Login data (account name): jas

gpg/card> url

URL to retrieve public key: https://josefsson.org/key-20190320.txt

gpg/card> forcesig

gpg/card> key-attr

Changing card key attribute for: Signature key

Please select what kind of key you want:

(1) RSA

(2) ECC

Your selection? 2

Please select which elliptic curve you want:

(1) Curve 25519

(4) NIST P-384

Your selection? 1

The card will now be re-configured to generate a key of type: ed25519

Note: There is no guarantee that the card supports the requested size.

If the key generation does not succeed, please check the

documentation of your card to see what sizes are allowed.

Changing card key attribute for: Encryption key

Please select what kind of key you want:

(1) RSA

(2) ECC

Your selection? 2

Please select which elliptic curve you want:

(1) Curve 25519

(4) NIST P-384

Your selection? 1

The card will now be re-configured to generate a key of type: cv25519

Changing card key attribute for: Authentication key

Please select what kind of key you want:

(1) RSA

(2) ECC

Your selection? 2

Please select which elliptic curve you want:

(1) Curve 25519

(4) NIST P-384

Your selection? 1

The card will now be re-configured to generate a key of type: ed25519

gpg/card>

jas@kaka:~$ gpg --card-edit

Reader ...........: 20A0:4211:FSIJ-1.2.19-5D271572:0

Application ID ...: D276000124010200FFFE5D2715720000

Application type .: OpenPGP

Version ..........: 2.0

Manufacturer .....: unmanaged S/N range

Serial number ....: 5D271572

Name of cardholder: Simon Josefsson

Language prefs ...: sv

Salutation .......: Mr.

URL of public key : https://josefsson.org/key-20190320.txt

Login data .......: jas

Signature PIN ....: not forced

Key attributes ...: ed25519 cv25519 ed25519

Max. PIN lengths .: 127 127 127

PIN retry counter : 3 3 3

Signature counter : 0

KDF setting ......: on

Signature key ....: [none]

Encryption key....: [none]

Authentication key: [none]

General key info..: [none]

jas@kaka:~$
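Comparing the two card listings by eye is easy to get wrong, so a small check can help. The Python sketch below parses a few lines in the same layout as the output above and confirms the settings chosen in this walkthrough. The `parse_card_status` and `check_configuration` helpers are hypothetical, and parsing human-readable output like this is fragile; treat it as a convenience, not as a robust interface to GnuPG.

```python
def parse_card_status(text: str) -> dict[str, str]:
    """Map each 'Label ....: value' line to a label/value pair."""
    fields = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        if sep:
            # Drop the dotted padding that follows each label.
            fields[label.rstrip(" .")] = value.strip()
    return fields


def check_configuration(fields: dict[str, str]) -> list[str]:
    """Return a list of settings that differ from the ones chosen above."""
    problems = []
    if fields.get("Key attributes") != "ed25519 cv25519 ed25519":
        problems.append("key attributes are not ed25519/cv25519/ed25519")
    if fields.get("KDF setting") != "on":
        problems.append("KDF is not enabled")
    return problems


# Excerpts copied from the listings before and after configuration.
before = """\
Key attributes ...: rsa2048 rsa2048 rsa2048
Max. PIN lengths .: 127 127 127
KDF setting ......: off
"""
after = """\
URL of public key : https://josefsson.org/key-20190320.txt
Key attributes ...: ed25519 cv25519 ed25519
Max. PIN lengths .: 127 127 127
KDF setting ......: on
"""

print(check_configuration(parse_card_status(before)))
print(check_configuration(parse_card_status(after)))
```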

Once the card is set up, bring out your offline machine, boot it, and mount your USB stick with the offline key. The paths below will be different for you, and this uses a somewhat unorthodox approach of working with fresh GnuPG configuration paths that I chose for the USB stick.

jas@kaka:/media/jas/2c699cbd-b77e-4434-a0d6-0c4965864296$ cp -a gnupghome-backup-masterkey gnupghome-import-nitrokey-5D271572

jas@kaka:/media/jas/2c699cbd-b77e-4434-a0d6-0c4965864296$ gpg --homedir $PWD/gnupghome-import-nitrokey-5D271572 --edit-key B1D2BD1375BECB784CF4F8C4D73CF638C53C06BE

gpg (GnuPG) 2.2.27; Copyright (C) 2021 Free Software Foundation, Inc.

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Secret key is available.

sec ed25519/D73CF638C53C06BE

created: 2019-03-20 expired: 2019-10-22 usage: SC

trust: ultimate validity: expired

[ expired] (1). Simon Josefsson <simon@josefsson.org>

gpg> keytocard

Really move the primary key? (y/N) y

Please select where to store the key:

(1) Signature key

(3) Authentication key

Your selection? 1

sec ed25519/D73CF638C53C06BE

created: 2019-03-20 expired: 2019-10-22 usage: SC

trust: ultimate validity: expired

[ expired] (1). Simon Josefsson <simon@josefsson.org>

gpg>

Save changes? (y/N) y

jas@kaka:/media/jas/2c699cbd-b77e-4434-a0d6-0c4965864296$

At this point it is useful to confirm, back on your regular machine, that the Nitrokey has the master key available and that it is possible to sign statements with it:

jas@kaka:~$ gpg --card-status

Reader ...........: 20A0:4211:FSIJ-1.2.19-5D271572:0

Application ID ...: D276000124010200FFFE5D2715720000

Application type .: OpenPGP

Version ..........: 2.0

Manufacturer .....: unmanaged S/N range

Serial number ....: 5D271572

Name of cardholder: Simon Josefsson

Language prefs ...: sv

Salutation .......: Mr.

URL of public key : https://josefsson.org/key-20190320.txt

Login data .......: jas

Signature PIN ....: not forced

Key attributes ...: ed25519 cv25519 ed25519

Max. PIN lengths .: 127 127 127

PIN retry counter : 3 3 3

Signature counter : 1

KDF setting ......: on

Signature key ....: B1D2 BD13 75BE CB78 4CF4 F8C4 D73C F638 C53C 06BE

created ....: 2019-03-20 23:37:24

Encryption key....: [none]

Authentication key: [none]

General key info..: pub ed25519/D73CF638C53C06BE 2019-03-20 Simon Josefsson <simon@josefsson.org>

sec> ed25519/D73CF638C53C06BE created: 2019-03-20 expires: 2023-09-19

card-no: FFFE 5D271572

ssb> ed25519/80260EE8A9B92B2B created: 2019-03-20 expires: 2023-09-19

card-no: FFFE 42315277

ssb> ed25519/51722B08FE4745A2 created: 2019-03-20 expires: 2023-09-19

card-no: FFFE 42315277

ssb> cv25519/02923D7EE76EBD60 created: 2019-03-20 expires: 2023-09-19

card-no: FFFE 42315277

jas@kaka:~$ echo foo | gpg -a --sign | gpg --verify

gpg: Signature made Thu Mar 16 22:11:02 2023 CET

gpg: using EDDSA key B1D2BD1375BECB784CF4F8C4D73CF638C53C06BE

gpg: Good signature from "Simon Josefsson <simon@josefsson.org>" [ultimate]

jas@kaka:~$
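The card check above can also be scripted. The following is a minimal sketch, assuming the `gpg --card-status` output keeps the "Signature key ....:" label shown in the transcript; `card_signature_fpr` is a hypothetical helper name, not part of GnuPG.

```shell
#!/bin/sh
# Sketch: extract the signature-key fingerprint from `gpg --card-status`
# text read on stdin. The helper name is an assumption for illustration.
card_signature_fpr() {
    # Keep only the "Signature key ....:" line, drop the label,
    # then strip the spaces that group the fingerprint in blocks of four.
    sed -n 's/^Signature key[ .]*: *//p' | tr -d ' '
}
```

It would typically be used as `gpg --card-status | card_signature_fpr`, with the result compared against the fingerprint you expect to be on the card.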

Finally, to retrieve and sign a key, for example Andre Heinecke's, whose OpenPGP key identifier I could confirm from his business card:

jas@kaka:~$ gpg --locate-external-keys aheinecke@gnupg.com

gpg: key 1FDF723CF462B6B1: public key "Andre Heinecke <aheinecke@gnupg.com>" imported

gpg: Total number processed: 1

gpg: imported: 1

gpg: marginals needed: 3 completes needed: 1 trust model: pgp

gpg: depth: 0 valid: 2 signed: 7 trust: 0-, 0q, 0n, 0m, 0f, 2u

gpg: depth: 1 valid: 7 signed: 64 trust: 7-, 0q, 0n, 0m, 0f, 0u

gpg: next trustdb check due at 2023-05-26

pub rsa3072 2015-12-08 [SC] [expires: 2025-12-05]

94A5C9A03C2FE5CA3B095D8E1FDF723CF462B6B1

uid [ unknown] Andre Heinecke <aheinecke@gnupg.com>

sub ed25519 2017-02-13 [S]

sub ed25519 2017-02-13 [A]

sub rsa3072 2015-12-08 [E] [expires: 2025-12-05]

sub rsa3072 2015-12-08 [A] [expires: 2025-12-05]

jas@kaka:~$ gpg --edit-key "94A5C9A03C2FE5CA3B095D8E1FDF723CF462B6B1"

gpg (GnuPG) 2.2.27; Copyright (C) 2021 Free Software Foundation, Inc.

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

pub rsa3072/1FDF723CF462B6B1

created: 2015-12-08 expires: 2025-12-05 usage: SC

trust: unknown validity: unknown

sub ed25519/2978E9D40CBABA5C

created: 2017-02-13 expires: never usage: S

sub ed25519/DC74D901C8E2DD47

created: 2017-02-13 expires: never usage: A

The following key was revoked on 2017-02-23 by RSA key 1FDF723CF462B6B1 Andre Heinecke <aheinecke@gnupg.com>

sub cv25519/1FFE3151683260AB

created: 2017-02-13 revoked: 2017-02-23 usage: E

sub rsa3072/8CC999BDAA45C71F

created: 2015-12-08 expires: 2025-12-05 usage: E

sub rsa3072/6304A4B539CE444A

created: 2015-12-08 expires: 2025-12-05 usage: A

[ unknown] (1). Andre Heinecke <aheinecke@gnupg.com>

gpg> sign

pub rsa3072/1FDF723CF462B6B1

created: 2015-12-08 expires: 2025-12-05 usage: SC

trust: unknown validity: unknown

Primary key fingerprint: 94A5 C9A0 3C2F E5CA 3B09 5D8E 1FDF 723C F462 B6B1

Andre Heinecke <aheinecke@gnupg.com>

This key is due to expire on 2025-12-05.

Are you sure that you want to sign this key with your

key "Simon Josefsson <simon@josefsson.org>" (D73CF638C53C06BE)

Really sign? (y/N) y

gpg> quit

Save changes? (y/N) y

jas@kaka:~$

This is on my day-to-day machine, using the Nitrokey Start with the offline key. No need to boot the old offline machine just to sign keys or extend expiry anymore! At FOSDEM'23 I managed to get at least one DD signature on my new key, and the Debian keyring maintainers accepted my Ed25519 key. Hopefully I can now finally let my 2014-era RSA3744 key expire on 2023-09-19 and not extend it any further. This should finish my transition to a simpler OpenPGP key setup, yay!

apt upgrade until they have a recent kernel.

apt changelog linux-image-unsigned-$(uname -r) to see if Revoke & rotate to new signing key (LP: #2002812) is mentioned in there to see if it is signed with the new key.

shimx64.efi.signed or (on arm64) shimaa64.efi.signed alternative. The best link needs to point to the file ending in latest:

$ update-alternatives --display shimx64.efi.signed

shimx64.efi.signed - auto mode

link best version is /usr/lib/shim/shimx64.efi.signed.latest

link currently points to /usr/lib/shim/shimx64.efi.signed.latest

link shimx64.efi.signed is /usr/lib/shim/shimx64.efi.signed

/usr/lib/shim/shimx64.efi.signed.latest - priority 100

/usr/lib/shim/shimx64.efi.signed.previous - priority 50

dpkg-reconfigure shim-signed. You'll see in the output if the shim was updated, or you can check the output of update-alternatives as you did above after the reconfiguration has finished.
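The update-alternatives check can be automated. This is a rough sketch, assuming the output format shown above (a "link currently points to" line followed by the path); `shim_points_to_latest` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed (exit 0) only if the alternative currently points to a
# file ending in ".latest". Reads `update-alternatives --display` text
# on stdin. The function name is an assumption for illustration.
shim_points_to_latest() {
    awk '
        /link currently points to/ {
            found = 1
            ok = ($NF ~ /\.latest$/)   # last field is the target path
        }
        END { exit !(found && ok) }
    '
}
```

A possible use is `update-alternatives --display shimx64.efi.signed | shim_points_to_latest && echo "shim is current"`.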

For the out of memory issues in grub, you need grub2-signed 1.187.3~ (same binaries

as above).

~<release>.1 appended to the version

| Series: | Innkeeper Chronicles #6 |
| Publisher: | NYLA Publishing |
| Copyright: | 2022 |
| ISBN: | 1-64197-239-4 |
| Format: | Kindle |
| Pages: | 440 |

As a follow-up to yesterday's post detailing my favourite works of fiction from 2022, today I'll be listing my favourite fictional works that are typically filed under classics.

Books that just missed the cut here include: E. M. Forster's A Room with a View (1908) and his later A Passage to India (1924), both gently nudged out by Forster's superb Howards End (see below). Giuseppe Tomasi di Lampedusa's The Leopard (1958) also just missed out on a write-up here, but I can definitely recommend it to anyone interested in reading a modern Italian classic.

War and Peace (1867) Leo Tolstoy

It's strange to think that there is almost no point in reviewing this novel: who hasn't heard of War and Peace? What more could possibly be said about it now? Still, when I was growing up, War and Peace was always the stereotypical example of the 'impossible book', and even to start it was, at best, a pointless task, and an act of hubris at worst. And so there surely exists a parallel universe in which I never have read and never will read the book... Nevertheless, let us try to set the scene. Book nine of the novel opens as follows:

On the twelfth of June, 1812, the forces of Western Europe crossed the Russian frontier and war began; that is, an event took place opposed to human reason and to human nature. Millions of men perpetrated against one another such innumerable crimes, frauds, treacheries, thefts, forgeries, issues of false money, burglaries, incendiarisms and murders as in whole centuries are not recorded in the annals of all the law courts of the world, but which those who committed them did not at the time regard as being crimes. What produced this extraordinary occurrence? What were its causes? […] The more we try to explain such events in history reasonably, the more unreasonable and incomprehensible they become to us.

Set against the backdrop of the Napoleonic Wars and Napoleon's invasion of Russia, War and Peace follows the lives and fates of three aristocratic families: the Rostovs, the Bolkonskys and the Bezukhovs. These characters find themselves situated athwart (or against) history, and all this time, Napoleon is marching ever closer to Moscow. Still, Napoleon himself is essentially just a kind of wallpaper for a diverse set of personal stories touching on love, jealousy, hatred, retribution, naivety, nationalism, stupidity and much much more. As Elif Batuman wrote earlier this year, "the whole premise of the book was that you couldn't explain war without recourse to domesticity and interpersonal relations." The result is that Tolstoy has woven an incredibly intricate web that connects the war, noble families and the everyday Russian people to a degree that is surprising for a book started in 1865. Tolstoy's characters are probably timeless (especially the picaresque adventures and constantly changing thoughts of Pierre Bezukhov), and the reader who has any social experience will immediately recognise characters' thoughts and actions. Some of this is at a 'micro' interpersonal level: for instance, take this example from the elegant party that opens the novel:

Each visitor performed the ceremony of greeting this old aunt whom not one of them knew, not one of them wanted to know, and not one of them cared about. The aunt spoke to each of them in the same words, about their health and her own and the health of Her Majesty, who, thank God, was better today. And each visitor, though politeness prevented his showing impatience, left the old woman with a sense of relief at having performed a vexatious duty and did not return to her the whole evening.

But then, some of the observations are at the 'macro' level of the entire continent. This section about cities that feel themselves in danger might suffice as an example:

At the approach of danger, there are always two voices that speak with equal power in the human soul: one very reasonably tells a man to consider the nature of the danger and the means of escaping it; the other, still more reasonably, says that it is too depressing and painful to think of the danger, since it is not in man's power to foresee everything and avert the general course of events, and it is therefore better to disregard what is painful till it comes and to think about what is pleasant. In solitude, a man generally listens to the first voice, but in society to the second.

And finally, in his lengthy epilogues, Tolstoy offers us a dissertation on the behaviour of large organisations, much of it through engagingly witty analogies. These epilogues actually turn out to be an oblique and sarcastic commentary on the idiocy of governments and the madness of war in general. Indeed, the thorough dismantling of the 'great man' theory of history is a common theme throughout the book:

During the whole of that period [of 1812], Napoleon, who seems to us to have been the leader of all these movements - as the figurehead of a ship may seem to a savage to guide the vessel - acted like a child who, holding a couple of strings inside a carriage, thinks he is driving it. […] Why do [we] all speak of a 'military genius'? Is a man a genius who can order bread to be brought up at the right time and say who is to go to the right and who to the left? It is only because military men are invested with pomp and power and crowds of sycophants flatter power, attributing to it qualities of genius it does not possess.

Unlike some other readers, I especially enjoyed these diversions into the accounting and workings of history, as well as our narrow-minded way of trying to 'explain' things in a singular way:

When an apple has ripened and falls, why does it fall? Because of its attraction to the earth, because its stalk withers, because it is dried by the sun, because it grows heavier, because the wind shakes it, or because the boy standing below wants to eat it? Nothing is the cause. All this is only the coincidence of conditions in which all vital organic and elemental events occur. And the botanist who finds that the apple falls because the cellular tissue decays and so forth is equally right with the child who stands under the tree and says the apple fell because he wanted to eat it and prayed for it.

Given all of these serious asides, I was also not expecting this book to be quite so funny. At the risk of boring the reader with citations, take this sarcastic remark about the ineptness of medicine men:

After his liberation, [Pierre] fell ill and was laid up for three months. He had what the doctors termed 'bilious fever.' But despite the fact that the doctors treated him, bled him and gave him medicines to drink he recovered.

There is actually a multitude of remarks that are not entirely complimentary towards Russian medical practice, but they are usually deployed with an eye to the human element involved rather than simply to the detriment of a doctor's reputation: "How would the count have borne his dearly loved daughter's illness had he not known that it was costing him a thousand rubles?" Other elements of note include some stunning literary set pieces, such as when Prince Andrei encounters a gnarly oak tree under two different circumstances in his life, and when Natasha's 'Russian' soul is awakened by the strains of a folk song on the balalaika. Still, despite all of these micro- and macro-level happenings, for a long time I felt that something else was going on in War and Peace. It was difficult to put into words precisely what it was until I came across this passage by E. M. Forster:

After one has read War and Peace for a bit, great chords begin to sound, and we cannot say exactly what struck them. They do not arise from the story [and] they do not come from the episodes nor yet from the characters. They come from the immense area of Russia, over which episodes and characters have been scattered, from the sum-total of bridges and frozen rivers, forests, roads, gardens and fields, which accumulate grandeur and sonority after we have passed them. Many novelists have the feeling for place, [but] very few have the sense of space, and the possession of it ranks high in Tolstoy's divine equipment. Space is the lord of War and Peace, not time.

'Space' indeed. Yes, potential readers should note the novel's great length, but the 365 chapters are actually remarkably short, so the sensation of reading it is not in the least overwhelming. And more importantly, once you become familiar with its large cast of characters, it is really not a difficult book to follow, especially when compared to the other Russian classics. My only regret is that it has taken me so long to read this magnificent novel and that I might find it hard to find time to re-read it within the next few years.

Coming Up for Air (1939) George Orwell

It wouldn't be a roundup of mine without at least one entry from George Orwell, and, this year, that place is occupied by a book I hadn't read in almost two decades. The George Bowling of Coming Up for Air is a middle-aged insurance salesman who lives in a distinctly average English suburban row house with his nuclear family. One day, after winning some money on a bet, he goes back to the village where he grew up in order to fish in a pool he remembers from thirty years before. Less important than the plot, however, are both the well-observed remarks and scathing criticisms that Bowling has of the town he has returned to, combined with an ominous sense of foreboding before the Second World War breaks out. At several times throughout the book, George's placid thoughts about his beloved carp pool are replaced by racing, anxious thoughts that overwhelm his inner peace:

War is coming. In 1941, they say. And there'll be plenty of broken crockery, and little houses ripped open like packing-cases, and the guts of the chartered accountant's clerk plastered over the piano that he's buying on the never-never. But what does that kind of thing matter, anyway? I'll tell you what my stay in Lower Binfield had taught me, and it was this. IT'S ALL GOING TO HAPPEN. All the things you've got at the back of your mind, the things you're terrified of, the things that you tell yourself are just a nightmare or only happen in foreign countries. The bombs, the food-queues, the rubber truncheons, the barbed wire, the coloured shirts, the slogans, the enormous faces, the machine-guns squirting out of bedroom windows. It's all going to happen. I know it - at any rate, I knew it then. There's no escape. Fight against it if you like, or look the other way and pretend not to notice, or grab your spanner and rush out to do a bit of face-smashing along with the others. But there's no way out. It's just something that's got to happen.

Already we can hear the psychological madness that underpinned the Second World War. Indeed, there is no great story in Coming Up For Air, no wonderfully empathetic characters and no revelations or catharsis, so it is impressive that I was held by the descriptions, observations and nostalgic remembrances about life in modern Lower Binfield, its residents, and how it has changed over the years. It turns out, of course, that George's beloved pool has been filled in with rubbish, and the village has been perverted by modernity beyond recognition. And to cap it off, the principal event of George's holiday in Lower Binfield is an accidental bombing by the British Royal Air Force. Orwell is always good at descriptions of awful food, and this book is no exception:

The frankfurter had a rubber skin, of course, and my temporary teeth weren't much of a fit. I had to do a kind of sawing movement before I could get my teeth through the skin. And then suddenly pop! The thing burst in my mouth like a rotten pear. A sort of horrible soft stuff was oozing all over my tongue. But the taste! For a moment I just couldn't believe it. Then I rolled my tongue around it again and had another try. It was fish! A sausage, a thing calling itself a frankfurter, filled with fish! I got up and walked straight out without touching my coffee. God knows what that might have tasted of.

Many other tell-tale elements of Orwell's fictional writing are in attendance in this book as well, albeit worked out somewhat less successfully than elsewhere in his oeuvre. For example, the idea of a physical ailment also serving as a metaphor is present in George's false teeth, embodying his constant preoccupation with his ageing. (Readers may recall Winston Smith's varicose ulcer representing his repressed humanity in Nineteen Eighty-Four). And, of course, we have a prematurely middle-aged protagonist who almost but not quite resembles Orwell himself. Given this and a few other niggles (such as almost all the women being of the typical Orwell 'nagging wife' type), it is not exactly Orwell's magnum opus. But it remains a fascinating historical snapshot of the mood shared by a vast number of people just prior to the Second World War breaking out, as well as a captivating insight into how the process of nostalgia functions.

Howards End (1910) E. M. Forster

Howards End begins with the following sentence:

One may as well begin with Helen's letters to her sister.

In fact, "one may as well begin with" my own assumptions about this book instead. I was actually primed to consider Howards End a much more 'Victorian' book: I had just finished Virginia Woolf's Mrs Dalloway and had found her 1925 book at once rather 'modern' but also very much constrained by its time. I must have then unconsciously surmised that a book written 15 years before would be even more inscrutable, and, with its Victorian social mores added on as well, Howards End would probably not undress itself so readily in front of the reader. No doubt there were also the usual expectations about 'the classics' as well. So imagine my surprise when I realised just how inordinately affable and witty Howards End turned out to be. It doesn't have that Wildean shine of humour, of course, but it's a couple of fields over in the English countryside, perhaps abutting the more mordant social satires of the earlier George Orwell novels (see Coming Up for Air above).

But now let us return to the story itself. Howards End explores class warfare, conflict and the English character through a tale of three quite different families at the beginning of the twentieth century: the rich Wilcoxes; the gentle & idealistic Schlegels; and the lower-middle class Basts. As the Bloomsbury Group Schlegel sisters desperately try to help the Basts and educate the rich but close-minded Wilcoxes, the three families are drawn ever closer and closer together. Although the whole story does, I suppose, revolve around the house in the title (which is based on Forster's own childhood home), Howards End is perhaps best described as a comedy of manners or a novel that shows up the hypocrisy of people and society. In fact, it is surprising how little of the story actually takes place in the eponymous house, with the overwhelming majority of the first half of the book taking place in London. But it is perhaps more illuminating to remark that the Howards End of the book is a house that the Wilcoxes who own it at the start of the novel do not really need or want.

What I particularly liked about Howards End is how the main characters' ideals alter as they age, and subsequently how they find their lives changing in different ways. Some of them find themselves better off at the end, others worse. And whilst it is also surprisingly funny, it still manages to trade in heavier social topics as well. This is apparent in the fact that, although the characters themselves are primarily in charge of their own destinies, their choices are still constrained by the changing world and shifting sense of morality around them. This shouldn't be too surprising: after all, Forster's novel was published just four years before the Great War, a distinctly uncertain time. Not for nothing did Virginia Woolf herself later observe that "on or about December 1910, human character changed" and that "all human relations have shifted: those between masters and servants, husbands and wives, parents and children." This process can undoubtedly be seen rehearsed throughout Forster's Howards End, and it's a credit to the author to be able to capture it so early on, if not even before it was widespread throughout Western Europe.

I was also particularly taken by Forster's fertile use of simile. An extremely apposite example can be found in the description Tibby Schlegel gives of his fellow Cambridge undergraduates. Here, Tibby doesn't want to besmirch his lofty idealisation of them with any banal specificities, and wishes that the idea of them remain as ideal Platonic forms instead. Or, as Forster puts it, to Tibby it is as if they are "pictures that must not walk out of their frames." Wilde, at his weakest, is 'just' style, but Forster often deploys his flair for a deeper effect. Indeed, when you get to the end of this section mentioning picture frames, you realise Forster has actually just smuggled into the story a failed attempt on Tibby's part to engineer an anonymous homosexual encounter with another undergraduate. It is a credit to Forster's sleight-of-hand that you don't quite notice what has just happened underneath you and that the book's reticence to honestly describe what has happened is thus structurally analogous to Tibby's reluctance to admit his desires to himself. Another layer to the character of Tibby (and the novel as a whole) is thereby introduced without the imposition of clumsy literary scaffolding. In a similar vein, I felt very clever noticing the arch reference to Debussy's Prélude à l'après-midi d'un faune until I realised I just fell into the trap Forster set for the reader in that I had become even more like Tibby in his pseudo-scholarly views on classical music.

Finally, I enjoyed that each chapter commences with an ironic and self-conscious bon mot about society which is only slightly overblown for effect. Particularly amusing are the ironic asides on "women" that run through the book, ventriloquising the narrow-minded views of people like the Wilcoxes. The omniscient and amiable narrator of the book also recalls those ironically distant voiceovers from various French New Wave films at times, yet Forster's narrator seems to have bigger concerns in his mordant asides: Forster seems to encourage some sympathy for all of the characters, even the more contemptible ones at their worst moments. Highly recommended, as are Forster's A Room with a View (1908) and his later A Passage to India (1924).

The Good Soldier (1915) Ford Madox Ford

The Good Soldier starts off fairly simply as the narrator's account of his and his wife's relationship with some old friends, including the eponymous 'Good Soldier' of the book's title. It's an experience to read the beginning of this novel, as, like any account of endless praise of someone you've never met or care about, the pages of approving remarks about them appear to be intended to wash over you. Yet as the chapters of The Good Soldier go by, the account of the other characters in the book gets darker and darker. Although the author himself is uncritical of others' actions, your own critical faculties are slowly brought into play, and you gradually begin to question the narrator's retelling of events. Our narrator is an unreliable narrator in the strict sense of the term, but with the caveat that he is at least telling us everything we need to know to come to our own conclusions.

As the book unfolds further, the narrator's compromised credibility seems to infuse every element of the novel: even the 'Good' of the book's title starts to seem like a minor dishonesty, perhaps serving as the inspiration for the irony embedded in the title of The 'Great' Gatsby. Much more effectively, however, the narrator's fixations, distractions and manner of speaking feel very much part of his dissimulation. It sometimes feels like he is unconsciously skirting over the crucial elements in his tale, exactly like one does in real life when recounting a story containing incriminating ingredients. Indeed, just how much the narrator is conscious of his own concealment is just one part of what makes this such an interesting book: Ford Madox Ford has gifted us with enough ambiguity that it is also possible that even the narrator cannot find it within himself to understand the events of the story he is narrating.

It was initially hard to believe that such a carefully crafted analysis of a small group of characters could have been written so long ago, and despite being fairly easy to read, The Good Soldier is an almost infinitely subtle book (even the jokes are of the subtle kind) and will likely get a re-read within the next few years.

Anna Karenina (1878) Leo Tolstoy

There are many similar themes running through War and Peace (reviewed above) and Anna Karenina. Unrequited love; a young man struggling to find a purpose in life; a loving family; an overwhelming love of nature and countless fascinating observations about the minutiae of Russian society. Indeed, rather than primarily being about the eponymous Anna, Anna Karenina provides a vast panorama of contemporary life in Russia and of humanity in general. Nevertheless, our Anna is a sophisticated woman who abandons her empty existence as the wife of government official Alexei Karenin, a colourless man who has little personality of his own, and she turns to a certain Count Vronsky in order to fulfil her passionate nature. Needless to say, this results in tragic consequences as their (admittedly somewhat qualified) desire to live together crashes against the rocks of reality and Russian society.

Parallel to Anna's narrative, though, Konstantin Levin serves as the novel's alter-protagonist. In contrast to Anna, Levin is a socially awkward individual who straddles many schools of thought within Russia at the time: he is neither a free-thinker (nor heavy-drinker) like his brother Nikolai, and neither is he a bookish intellectual like his half-brother Serge. In short, Levin is his own man, and it is generally agreed by commentators that he is Tolstoy's surrogate within the novel. Levin tends to come to his own version of an idea, and he would rather find his own way than adopt any prefabricated view, even if confusion and muddle is the eventual result. In a roughly isomorphic fashion then, he resembles Anna in this particular sense, whose story is a counterpart to Levin's in their respective searches for happiness and self-actualisation.

Whilst many of the passionate and exciting passages are told on Anna's side of the story (I'm thinking of the horse race in particular, as thrilling as anything in cinema), many of the broader political thoughts about the nature of the working classes are expressed on Levin's side instead. These are stirring and engaging in their own way, though, such as when he joins his peasants to mow the field and seems to enter the nineteenth-century version of 'flow':

The longer Levin mowed, the more often he felt those moments of oblivion during which it was no longer his arms that swung the scythe, but the scythe itself that lent motion to his whole body, full of life and conscious of itself, and, as if by magic, without a thought of it, the work got rightly and neatly done on its own. These were the most blissful moments.

Overall, Tolstoy poses no didactic moral message towards any of the characters in Anna Karenina, and merely invites us to watch rather than judge. (Still, there is a hilarious section that is scathing of contemporary classical music, presaging many of the ideas found in Tolstoy's 1897 What is Art?). In addition, just like the earlier War and Peace, the novel is run through with a number of uncannily accurate observations about daily life:

Anna smiled, as one smiles at the weaknesses of people one loves, and, putting her arm under his, accompanied him to the door of the study.

... as well as the usual sprinkling of Tolstoy's sardonic humour ("No one is pleased with his fortune, but everyone is pleased with his wit."). Fyodor Dostoyevsky, the other titan of Russian literature, once described Anna Karenina as a "flawless work of art," and if you're only going to read one Tolstoy novel in your life, it should probably be this one.

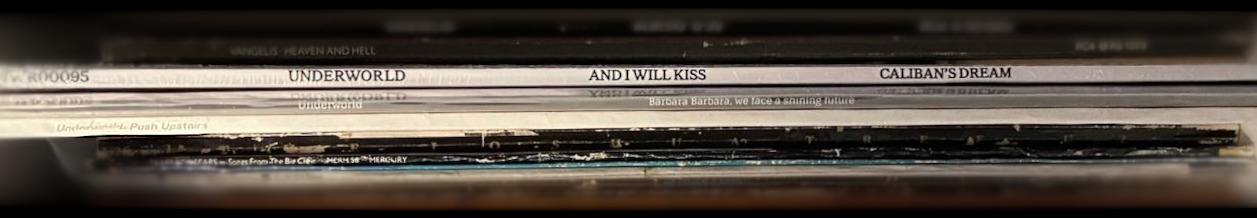

One of my main musical 'discoveries' in 2022 was British electronic band Underworld. I'm super late to the party. Underworld's commercial high point was the mid nineties. And I was certainly aware of them then: the use of Born Slippy .NUXX in 1996's Trainspotting soundtrack was ubiquitous, but it didn't grab me.

In more recent years my colleague and friend Andrew Dinn (with whom I enjoyed many pre-pandemic conversations about music) enthusiastically advocated for Underworld (and furnished me with some rarities). This started to get them under my skin.

It took a bit longer for me to truly get them, though, and the final straw was revisiting their BBC 6 Music Festival performance from 2016 (with "Rez" and its companion piece "Cowgirl" standing out).

So where to start? There's something compelling about their whole catalogue. This is a group with which you can go deep, if you wish. The only album which hasn't grabbed me is 100 days off and it's probably only a matter of time before it does (Andrew advocates the Extended Ansum Edition bootleg here). Here are four career-spanning personal highlights:

apt install --yes gdisk zfs-dkms zfs zfs-initramfs zfsutils-linux

echo REMAKE_INITRD=yes > /etc/dkms/zfs.conf

/dev/sdc with:

sgdisk --zap-all /dev/sdc

sgdisk -a1 -n1:24K:+1000K -t1:EF02 /dev/sdc

sgdisk -n2:1M:+512M -t2:EF00 /dev/sdc

sgdisk -n3:0:+1G -t3:BF01 /dev/sdc

sgdisk -n4:0:0 -t4:BF00 /dev/sdc

root@curie:/home/anarcat# sgdisk -p /dev/sdc

Disk /dev/sdc: 1953525168 sectors, 931.5 GiB

Model: ESD-S1C

Sector size (logical/physical): 512/512 bytes

Disk identifier (GUID): [REDACTED]

Partition table holds up to 128 entries

Main partition table begins at sector 2 and ends at sector 33

First usable sector is 34, last usable sector is 1953525134

Partitions will be aligned on 16-sector boundaries

Total free space is 14 sectors (7.0 KiB)

Number Start (sector) End (sector) Size Code Name

1 48 2047 1000.0 KiB EF02

2 2048 1050623 512.0 MiB EF00

3 1050624 3147775 1024.0 MiB BF01

4 3147776 1953525134 930.0 GiB BF00
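The start and end sectors in that table follow directly from the sizes passed to sgdisk, given the 512-byte logical sectors reported above. As a rough cross-check (the helper names kib_to_sectors and end_sector are made up for this sketch, not part of any tool):

```shell
# Convert a size in KiB to a count of 512-byte logical sectors.
kib_to_sectors() {
    echo $(( $1 * 1024 / 512 ))
}

# Last sector of a partition that starts at sector $1 and spans $2 KiB.
end_sector() {
    echo $(( $1 + $(kib_to_sectors "$2") - 1 ))
}

kib_to_sectors 24                      # start of partition 1 (24K offset)
end_sector 48 1000                     # end of partition 1 (+1000K)
end_sector 2048 $((512 * 1024))        # end of partition 2 (+512M)
end_sector 1050624 $((1024 * 1024))    # end of partition 3 (+1G)
```

The four results should line up with the Start and End columns printed by sgdisk -p for partitions 1 to 3.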

smartctl command should tell us the sector size as well:

root@curie:~# smartctl -i /dev/sdb -qnoserial

smartctl 7.2 2020-12-30 r5155 [x86_64-linux-5.10.0-14-amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Western Digital Black Mobile

Device Model: WDC WD10JPLX-00MBPT0

Firmware Version: 01.01H01

User Capacity: 1 000 204 886 016 bytes [1,00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 2.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS T13/1699-D revision 6

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Tue May 17 13:33:04 2022 EDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

ashift value

correctly. We're going to go ahead and assume the SSD drive has the common

4KB sector size, which means ashift=12.
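For reference, ashift is the base-2 logarithm of the sector size in bytes, so 4096-byte sectors give 12 and 512-byte sectors give 9. A small sketch of that arithmetic (ashift_for is a hypothetical helper written for this note, not a ZFS command):

```shell
# Hypothetical helper: print the ashift value for a sector size in bytes.
ashift_for() {
    size=$1
    shift_bits=0
    # Reject anything that is not a plain positive integer.
    case $size in
        ''|*[!0-9]*) echo "not a number: $size" >&2; return 1 ;;
    esac
    [ "$size" -gt 0 ] || { echo "sector size must be positive" >&2; return 1; }
    while [ "$size" -gt 1 ]; do
        # A power of two stays even until it reaches 1.
        if [ $((size % 2)) -ne 0 ]; then
            echo "not a power of two: $1" >&2
            return 1
        fi
        size=$((size / 2))
        shift_bits=$((shift_bits + 1))
    done
    echo "$shift_bits"
}

ashift_for 4096   # prints 12
ashift_for 512    # prints 9
```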

Note here that we are not creating a separate partition for

swap. Swap on ZFS volumes (AKA "swap on ZVOL") can trigger lockups and

that issue is still not fixed upstream. Ubuntu recommends using a

separate partition for swap instead. But since this is "just" a

workstation, we're betting that we will not suffer from this problem,

after hearing a report from another Debian developer running this

setup on their workstation successfully.

We do not recommend this setup though. In fact, if I were to redo this

partition scheme, I would probably use LUKS encryption and set up a

dedicated swap partition, as I had problems with ZFS encryption as

well.

zpool create \

-o cachefile=/etc/zfs/zpool.cache \

-o ashift=12 -d \

-o feature@async_destroy=enabled \

-o feature@bookmarks=enabled \

-o feature@embedded_data=enabled \

-o feature@empty_bpobj=enabled \

-o feature@enabled_txg=enabled \

-o feature@extensible_dataset=enabled \

-o feature@filesystem_limits=enabled \

-o feature@hole_birth=enabled \

-o feature@large_blocks=enabled \

-o feature@lz4_compress=enabled \

-o feature@spacemap_histogram=enabled \

-o feature@zpool_checkpoint=enabled \

-O acltype=posixacl -O canmount=off \

-O compression=lz4 \

-O devices=off -O normalization=formD -O relatime=on -O xattr=sa \

-O mountpoint=/boot -R /mnt \

bpool /dev/sdc3

zpool create \

-o ashift=12 \

-O encryption=on -O keylocation=prompt -O keyformat=passphrase \

-O acltype=posixacl -O xattr=sa -O dnodesize=auto \

-O compression=zstd \

-O relatime=on \

-O canmount=off \

-O mountpoint=/ -R /mnt \

rpool /dev/sdc4

-o ashift=12: mentioned above, 4k sector size

-O encryption=on -O keylocation=prompt -O keyformat=passphrase: encryption, prompt for a password, default algorithm is aes-256-gcm, explicit in the guide, made implicit here

-O acltype=posixacl -O xattr=sa: enable ACLs, with better performance (not enabled by default)

-O dnodesize=auto: related to extended attributes, less compatibility with other implementations

-O compression=zstd: enable zstd compression, can be disabled/enabled by dataset with zfs set compression=off rpool/example

-O relatime=on: classic atime optimisation, another that could be used on a busy server is atime=off

-O canmount=off: do not make the pool mount automatically with mount -a?

-O mountpoint=/ -R /mnt: mount pool on / in the future, but /mnt for now

-O normalization=formD: normalize file names on comparisons (not storage), implies utf8only=on, which is a bad idea (and effectively meant my first sync failed to copy some files, including this folder from a supysonic checkout). This cannot be changed after the filesystem is created. Bad, bad, bad.

[...] any error can be detected, but cannot be corrected. This sounds like an acceptable compromise, but its actually not. The reason its not is that ZFS' metadata cannot be allowed to be corrupted. If it is it is likely the zpool will be impossible to mount (and will probably crash the system once the corruption is found). So a couple of bad sectors in the right place will mean that all data on the zpool will be lost. Not some, all. Also there's no ZFS recovery tools, so you cannot recover any data on the drives.

Compared with (say) ext4, where a single disk error can be recovered, this is pretty bad. But we are ready to live with this with the idea that we'll have hourly offline snapshots that we can easily recover from. It's a trade-off. Also, we're running this on a NVMe/M.2 drive which typically just blinks out of existence completely, and doesn't "bit rot" the way a HDD would. Also, the FreeBSD handbook quick start doesn't have any warnings about their first example, which is with a single disk. So I am reassured at least.

ROOT and BOOT

zfs create -o canmount=off -o mountpoint=none rpool/ROOT &&

zfs create -o canmount=off -o mountpoint=none bpool/BOOT

zfs create -o canmount=noauto -o mountpoint=/ rpool/ROOT/debian &&

zfs mount rpool/ROOT/debian &&

zfs create -o mountpoint=/boot bpool/BOOT/debian

debian name here is because we could technically have

multiple operating systems with the same underlying datasets.

zfs create rpool/home &&

zfs create -o mountpoint=/root rpool/home/root &&

chmod 700 /mnt/root &&

zfs create rpool/var

zfs create -o com.sun:auto-snapshot=false rpool/var/cache &&

zfs create -o com.sun:auto-snapshot=false rpool/var/tmp &&

chmod 1777 /mnt/var/tmp

zfs create -o canmount=off rpool/var/lib &&

zfs create -o com.sun:auto-snapshot=false rpool/var/lib/docker

rpool/var/lib to

create rpool/var/lib/docker otherwise we get this error:

cannot create 'rpool/var/lib/docker': parent does not exist

/mnt/var/lib doesn't fix that

problem. In fact, it makes things even more confusing because an

existing directory shadows a mountpoint, which is the opposite of

how things normally work.
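To see which datasets have to exist before a nested one can be created, the name can simply be walked up one component at a time. This is only a string-handling sketch (dataset_parents is a made-up helper and never calls zfs):

```shell
# Hypothetical helper: list every ancestor of a dataset name, deepest first.
dataset_parents() {
    name=$1
    # Strip the last path component until only the pool name is left.
    while [ "${name%/*}" != "$name" ]; do
        name=${name%/*}
        echo "$name"
    done
}

dataset_parents rpool/var/lib/docker
```

For rpool/var/lib/docker this lists rpool/var/lib, rpool/var and rpool, which is why rpool/var/lib is created first here, with canmount=off so it never gets mounted itself.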

Also note that you will probably need to change storage driver in

Docker, see the zfs-driver documentation for details but,

basically, I did:

echo '{ "storage-driver": "zfs" }' > /etc/docker/daemon.json

printf '[storage]\ndriver = "zfs"\n' > /etc/containers/storage.conf
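Both commands overwrite whatever configuration is already there. If that is a concern, a slightly more careful variant could refuse to touch an existing file. The sketch below assumes daemon.json needs to be a JSON object, and write_docker_storage_driver is a hypothetical helper name:

```shell
# Hypothetical helper: write the Docker storage-driver setting to the file
# given as $1, but refuse to clobber an existing configuration.
write_docker_storage_driver() {
    conf=$1
    if [ -e "$conf" ]; then
        echo "refusing to overwrite existing $conf" >&2
        return 1
    fi
    printf '{ "storage-driver": "zfs" }\n' > "$conf"
}

# Example against a scratch file rather than /etc/docker/daemon.json:
scratch=$(mktemp -d)
write_docker_storage_driver "$scratch/daemon.json" && cat "$scratch/daemon.json"
rm -r "$scratch"
```

If the file already exists, the setting would have to be merged in by hand.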

tmpfs for /run:

mkdir /mnt/run &&

mount -t tmpfs tmpfs /mnt/run &&

mkdir /mnt/run/lock

/srv, as that's the HDD stuff.

Also mount the EFI partition:

mkfs.fat -F 32 /dev/sdc2 &&

mount /dev/sdc2 /mnt/boot/efi/

/mnt. It should look

like this:

root@curie:~# LANG=C df -h -t zfs -t vfat

Filesystem Size Used Avail Use% Mounted on

rpool/ROOT/debian 899G 384K 899G 1% /mnt

bpool/BOOT/debian 832M 123M 709M 15% /mnt/boot

rpool/home 899G 256K 899G 1% /mnt/home

rpool/home/root 899G 256K 899G 1% /mnt/root

rpool/var 899G 384K 899G 1% /mnt/var

rpool/var/cache 899G 256K 899G 1% /mnt/var/cache

rpool/var/tmp 899G 256K 899G 1% /mnt/var/tmp

rpool/var/lib/docker 899G 256K 899G 1% /mnt/var/lib/docker

/dev/sdc2 511M 4.0K 511M 1% /mnt/boot/efi

for fs in /boot/ /boot/efi/ / /home/; do

echo "syncing $fs to /mnt$fs..." &&

rsync -aSHAXx --info=progress2 --delete $fs /mnt$fs

done

mount -l -t ext4,btrfs,vfat | awk '{print $3}'

/srv as it's on a different disk.
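The mount listing works because each line of mount output has the form "device on mountpoint type fstype (options)", so the third whitespace-separated field is the mount point. A sketch on canned input (the device names are invented, and mount points containing spaces would break this):

```shell
# Hypothetical helper: print the mount point from "mount"-style lines on stdin.
mountpoints() {
    awk '{ print $3 }'
}

# Sample lines in the format printed by mount(8); devices are placeholders.
printf '%s\n' \
    '/dev/sda1 on / type ext4 (rw,relatime)' \
    '/dev/sda2 on /boot/efi type vfat (rw,relatime)' | mountpoints
```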

On the first run, we had:

root@curie:~# for fs in /boot/ /boot/efi/ / /home/; do

echo "syncing $fs to /mnt$fs..." &&

rsync -aSHAXx --info=progress2 $fs /mnt$fs

done

syncing /boot/ to /mnt/boot/...

0 0% 0.00kB/s 0:00:00 (xfr#0, to-chk=0/299)

syncing /boot/efi/ to /mnt/boot/efi/...

16,831,437 100% 184.14MB/s 0:00:00 (xfr#101, to-chk=0/110)

syncing / to /mnt/...

28,019,293,280 94% 47.63MB/s 0:09:21 (xfr#703710, ir-chk=6748/839220)rsync: [generator] delete_file: rmdir(var/lib/docker) failed: Device or resource busy (16)

could not make way for new symlink: var/lib/docker

34,081,267,990 98% 50.71MB/s 0:10:40 (xfr#736577, to-chk=0/867732)

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1333) [sender=3.2.3]

syncing /home/ to /mnt/home/...

rsync: [sender] readlink_stat("/home/anarcat/.fuse") failed: Permission denied (13)

24,456,268,098 98% 68.03MB/s 0:05:42 (xfr#159867, ir-chk=6875/172377)

file has vanished: "/home/anarcat/.cache/mozilla/firefox/s2hwvqbu.quantum/cache2/entries/B3AB0CDA9C4454B3C1197E5A22669DF8EE849D90"

199,762,528,125 93% 74.82MB/s 0:42:26 (xfr#1437846, ir-chk=1018/1983979)rsync: [generator] recv_generator: mkdir "/mnt/home/anarcat/dist/supysonic/tests/assets/\#346" failed: Invalid or incomplete multibyte or wide character (84)

*** Skipping any contents from this failed directory ***

315,384,723,978 96% 76.82MB/s 1:05:15 (xfr#2256473, to-chk=0/2993950)

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1333) [sender=3.2.3]

utf8only feature might not be such a bad

idea. At this point, the procedure was restarted all the way back to

"Creating pools", after unmounting all ZFS filesystems (umount

/mnt/run /mnt/boot/efi && umount -t zfs -a) and destroying the pool,

which, surprisingly, doesn't require any confirmation (zpool destroy

rpool).

The second run was cleaner:

root@curie:~# for fs in /boot/ /boot/efi/ / /home/; do

echo "syncing $fs to /mnt$fs..." &&

rsync -aSHAXx --info=progress2 --delete $fs /mnt$fs

done

syncing /boot/ to /mnt/boot/...

0 0% 0.00kB/s 0:00:00 (xfr#0, to-chk=0/299)

syncing /boot/efi/ to /mnt/boot/efi/...

0 0% 0.00kB/s 0:00:00 (xfr#0, to-chk=0/110)

syncing / to /mnt/...

28,019,033,070 97% 42.03MB/s 0:10:35 (xfr#703671, ir-chk=1093/833515)rsync: [generator] delete_file: rmdir(var/lib/docker) failed: Device or resource busy (16)

could not make way for new symlink: var/lib/docker

34,081,807,102 98% 44.84MB/s 0:12:04 (xfr#736580, to-chk=0/867723)

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1333) [sender=3.2.3]

syncing /home/ to /mnt/home/...

rsync: [sender] readlink_stat("/home/anarcat/.fuse") failed: Permission denied (13)

IO error encountered -- skipping file deletion

24,043,086,450 96% 62.03MB/s 0:06:09 (xfr#151819, ir-chk=15117/172571)

file has vanished: "/home/anarcat/.cache/mozilla/firefox/s2hwvqbu.quantum/cache2/entries/4C1FDBFEA976FF924D062FB990B24B897A77B84B"

315,423,626,507 96% 67.09MB/s 1:14:43 (xfr#2256845, to-chk=0/2994364)

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1333) [sender=3.2.3]
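Both runs end with rsync exit code 23, which the rsync manual describes as a partial transfer due to error (24 is the variant for vanished source files). A wrapper script might want to tell these apart from harder failures. A minimal sketch, with describe_rsync_status as a made-up helper covering only a few codes:

```shell
# Hypothetical helper: map a few rsync exit codes to their documented meaning.
describe_rsync_status() {
    case $1 in
        0)  echo "success" ;;
        23) echo "partial transfer due to error" ;;
        24) echo "partial transfer due to vanished source files" ;;
        *)  echo "other failure (exit code $1)" ;;
    esac
}

describe_rsync_status 23
```

In a sync loop this would be called with "$?" right after each rsync invocation.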

anarcat@curie:~$ lsusb -tv | head -4

/: Bus 02.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/6p, 5000M

ID 1d6b:0003 Linux Foundation 3.0 root hub

    |__ Port 1: Dev 4, If 0, Class=Mass Storage, Driver=uas, 5000M

ID 0b05:1932 ASUSTek Computer, Inc.

shutdown now, but it seems like the systemd switch broke that,

so now you can reboot into grub and pick the "recovery"

option. Alternatively, you might try systemctl rescue, as I found

out.

I also wanted to copy the drive over to another new NVMe drive, but

that failed: it looks like the USB controller I have doesn't work with

older, non-NVMe drives.

mount --rbind /dev /mnt/dev &&

mount --rbind /proc /mnt/proc &&

mount --rbind /sys /mnt/sys &&

chroot /mnt /bin/bash

zpool.cache destruction:

cat > /etc/systemd/system/zfs-import-bpool.service <<EOF

[Unit]

DefaultDependencies=no

Before=zfs-import-scan.service

Before=zfs-import-cache.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/sbin/zpool import -N -o cachefile=none bpool

# Work-around to preserve zpool cache:

ExecStartPre=-/bin/mv /etc/zfs/zpool.cache /etc/zfs/preboot_zpool.cache

ExecStartPost=-/bin/mv /etc/zfs/preboot_zpool.cache /etc/zfs/zpool.cache

[Install]

WantedBy=zfs-import.target

EOF

systemctl enable zfs-import-bpool.service

/etc/fstab and /etc/crypttab to only contain

references to the legacy filesystems (/srv is still BTRFS!).

If we don't already have a tmpfs defined in /etc/fstab:

ln -s /usr/share/systemd/tmp.mount /etc/systemd/system/ &&

systemctl enable tmp.mount

grub-probe /boot | grep -q zfs &&

update-initramfs -c -k all &&

sed -i 's,GRUB_CMDLINE_LINUX.*,GRUB_CMDLINE_LINUX="root=ZFS=rpool/ROOT/debian",' /etc/default/grub &&

update-grub

dpkg-reconfigure grub-pc

grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=debian --recheck --no-floppy

mkdir /etc/zfs/zfs-list.cache

touch /etc/zfs/zfs-list.cache/bpool

touch /etc/zfs/zfs-list.cache/rpool

zed -F &

cat /etc/zfs/zfs-list.cache/bpool

cat /etc/zfs/zfs-list.cache/rpool

fg

Press Ctrl-C.

/mnt:

sed -Ei "s|/mnt/?|/|" /etc/zfs/zfs-list.cache/*
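The purpose of that substitution is to strip the temporary /mnt prefix from the cached mount points, so a dataset recorded at /mnt/home ends up at /home and the root dataset at /. It can be tried on sample input before touching the real cache files. The sketch assumes | as the sed delimiter and uses simplified two-column lines (the real cache files carry more columns), with strip_altroot as a made-up name:

```shell
# Hypothetical helper: remove the first /mnt prefix on each line of stdin.
strip_altroot() {
    sed -E 's|/mnt/?|/|'
}

# Simplified sample: dataset name, a tab, then the cached mount point.
printf 'rpool/ROOT/debian\t/mnt\nrpool/home\t/mnt/home\n' | strip_altroot
```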